Remastering a Masterpiece

My involvement in this project started when I posted to the Wing Commander CIC that I’d created a Wing Commander IV HD Video Pack. What began as a curiosity for my own amusement really kicked off when I discovered I could get the enhanced videos to play back in-game in the GOG release, so I thought I’d share them with the community.

I’m immensely proud to say that my remastered videos were warmly welcomed by the community. Shortly after I posted them, Pedro asked if he could use them in the WCIV remaster project. Once we got to talking and I mentioned I’m a media designer by profession, he also asked if I’d be able to help build a site for the project and, well… here we are!

The WCIV HD Video Pack has since gone through many improvements and iterations, so I thought fans of the game might like a little more background on the workflow behind remastering and upscaling this modern masterpiece.

When Is Intelligence Not Intelligent?

The most important thing to keep in mind when discussing Machine Learning is that the convention of calling it “Artificial Intelligence” is quite misleading. Most things we refer to in modern parlance as AI aren’t actually “intelligent” – the term is used because Neural Networks allow software to “learn” to process data without being directly programmed by a human being.

In the case of our upscaled videos, a Neural Network (NN) is “trained” to recognise how shapes, colours, curves etc. should look when you resize them. Massively powerful GPU-based systems are left to run trial-and-error comparisons, checking input against output, comparing that to larger source materials, keeping results that match closely and discarding results that don’t. The longer you leave this routine running, the more your NN “learns” and the more accurate the results when you push data through it.
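To make the trial-and-error idea concrete, here is a deliberately tiny Python sketch. It is not how Topaz trains its models (real training uses deep networks and far more sophisticated optimisation); it is a toy under stated assumptions. The "upscaler" has a single hypothetical parameter `w`, the "footage" is a one-dimensional list of numbers, and training simply tries random tweaks to `w`, keeping a tweak only when the upscaled result matches the larger source more closely.

```python
import random

def downscale(signal):
    """Keep every other sample: the toy 'low-resolution' version."""
    return signal[::2]

def upscale(low, w):
    """Rebuild each missing sample as a weighted blend of its two neighbours."""
    out = []
    for a, b in zip(low, low[1:]):
        out.append(a)
        out.append(w * a + (1.0 - w) * b)
    out.append(low[-1])
    return out

def error(high, w):
    """Mean squared difference between the upscale and the true larger source."""
    guess = upscale(downscale(high), w)
    return sum((g - h) ** 2 for g, h in zip(guess, high)) / len(high)

def train(sources, steps=2000, seed=0):
    """Random trial and error: keep a candidate only if it matches better."""
    rng = random.Random(seed)
    w = rng.random()                      # start from a random guess
    best = sum(error(s, w) for s in sources)
    for _ in range(steps):
        candidate = w + rng.uniform(-0.1, 0.1)
        score = sum(error(s, candidate) for s in sources)
        if score < best:                  # closer match: keep it
            w, best = candidate, score
        # otherwise the candidate is discarded
    return w, best

# Hypothetical "high-resolution" training material: simple ramps
# (odd length so that downscale followed by upscale restores the length).
SOURCES = [
    [float(i) for i in range(9)],
    [float(8 - i) for i in range(9)],
    [3.0 * i for i in range(9)],
]

if __name__ == "__main__":
    weight, remaining_error = train(SOURCES)
    print(weight, remaining_error)
```

For these ramps the best possible blend is an even split between the two neighbours, so the learned weight drifts towards 0.5 the longer the loop runs. Nobody typed that number in; the loop found it by comparing its guesses against the larger originals.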

Once the decision is made that a NN has run long enough to produce consistent results, the trained network is saved as an AI model that can be shipped inside a commercial application.

GIGO – Quality Is King

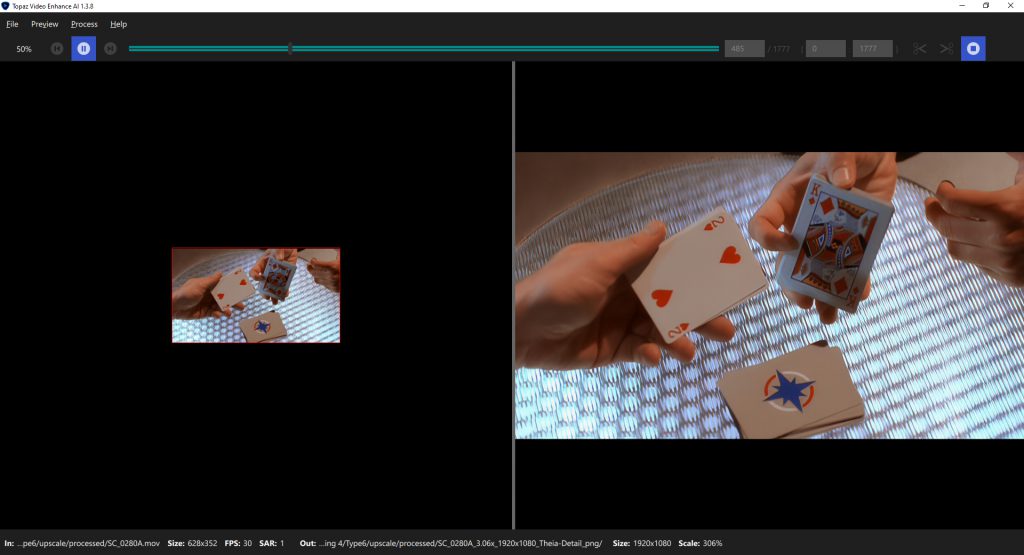

In my case, I use a NN-trained application called Topaz Labs Video Enhance AI. The application has been loaded with several AI models that can be applied to source materials of various quality with varying results.

Almost as a case-in-point of why “Artificial Intelligence” is a misleading term, the classic programming adage GIGO – Garbage In, Garbage Out – really applies here. The application itself is not actually intelligent. It can’t look at a piece of footage like we can and think, “oh, that’s a ball. I imagine if it were bigger, the curve around the edge would look like this and the light would fall across it like that”. It’s still software, and software is stupid. It can only do what it’s been specifically programmed to do – it’s just that in this case, it hasn’t been entirely programmed by people.

The result is that you’ll always get better upscale results from higher-quality source footage. Lucky for us that Wing Commander IV’s FMV was all filmed on high-grade 35mm film, under professional lighting and, crucially, eventually released as DVD video. While the resolution of the DVD footage isn’t up to modern standards, the quality and visual fidelity of the footage is relatively high. So GIGO works in our favour here – our low-resolution footage is quite high quality.

Upscaling Is Just One End of the Process

All that said, I don’t just take the DVD video and run it through Topaz Video Enhance AI. I do quite a lot of work to prepare the footage for upscale first. For a start, the footage is interlaced – each frame is stored as two fields, one holding the odd lines of the image and the other the even lines, captured at slightly different moments. If you play that back on a modern system, you get nasty combing artefacts – and the AI model would see that and try to maintain it.
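Combing is easier to see than to describe, so here is a small Python illustration using text "frames". It is a toy only: the `render`, `weave` and `line_double` helpers are made up for this example, and line doubling is the crudest possible de-interlace, not the method used for the actual videos.

```python
def render(position, width=6, rows=2):
    """Draw `rows` identical lines with a one-pixel object at `position`."""
    line = "." * position + "#" + "." * (width - position - 1)
    return [line] * rows

def weave(top_field, bottom_field):
    """Interleave two fields into one full-height frame (naive playback)."""
    frame = []
    for top, bottom in zip(top_field, bottom_field):
        frame.extend([top, bottom])
    return frame

def line_double(frame, keep="top"):
    """Crude de-interlace: keep one field and repeat each of its lines."""
    start = 0 if keep == "top" else 1
    out = []
    for line in frame[start::2]:
        out.extend([line, line])
    return out

# The object moved two pixels between the two fields being captured.
combed = weave(render(1), render(3))
clean = line_double(combed)

if __name__ == "__main__":
    print("\n".join(combed))
    print()
    print("\n".join(clean))
```

In `combed`, alternate lines disagree about where the object is, which is exactly the comb-tooth pattern you see on moving edges. `clean` throws away one field so every line agrees again, at the cost of half the vertical detail. Better de-interlacers try to recover that lost detail rather than simply repeating lines.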

On top of that, video standards are incredibly complicated. Frame rates, resolutions, colour-space profiles – all these things need to be correctly configured to squeeze the most quality possible out of a video file.

Here Comes the Technobabble

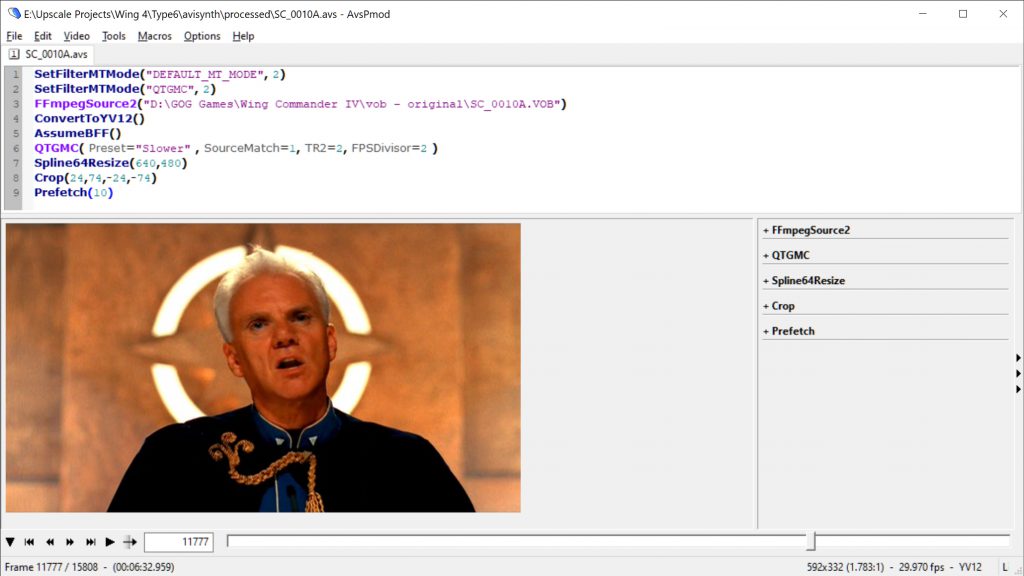

I write AVISynth routines to prepare the footage for the AI upscale process, then run them through FFMPEG to render the results. Specifically, I use AVISynth to capture the original footage, de-interlace it, crop out the letterbox bars from the 4:3 image to produce a 16:9 sequence, convert the colour-space to YV12 and standardise the frame rate.
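The letterbox crop comes down to a bit of aspect-ratio arithmetic, sketched below in Python. The frame heights are assumptions (480 lines for an NTSC DVD, 576 for PAL), the `letterbox_crop` helper is hypothetical, and the real bars on a given disc may not match the theoretical figure exactly, so treat the numbers as a starting point to check against the footage.

```python
from fractions import Fraction

def letterbox_crop(frame_height, frame_aspect, picture_aspect):
    """Return (active_lines, bar_lines) for a picture letterboxed in a frame.

    frame_aspect   -- display aspect ratio of the whole frame, e.g. 4:3
    picture_aspect -- aspect ratio of the picture inside the bars, e.g. 16:9
    """
    active = round(frame_height * frame_aspect / picture_aspect)
    bar = (frame_height - active) // 2   # bars are split evenly top and bottom
    return active, bar

FOUR_THREE = Fraction(4, 3)
SIXTEEN_NINE = Fraction(16, 9)

if __name__ == "__main__":
    print(letterbox_crop(480, FOUR_THREE, SIXTEEN_NINE))  # assumed NTSC frame
    print(letterbox_crop(576, FOUR_THREE, SIXTEEN_NINE))  # assumed PAL frame
```

For a 480-line frame this gives 360 active lines with a 60-line bar above and below. In AVISynth terms that would correspond to something like `Crop(0, 60, 0, -60)`, where the negative last argument counts lines in from the bottom edge.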

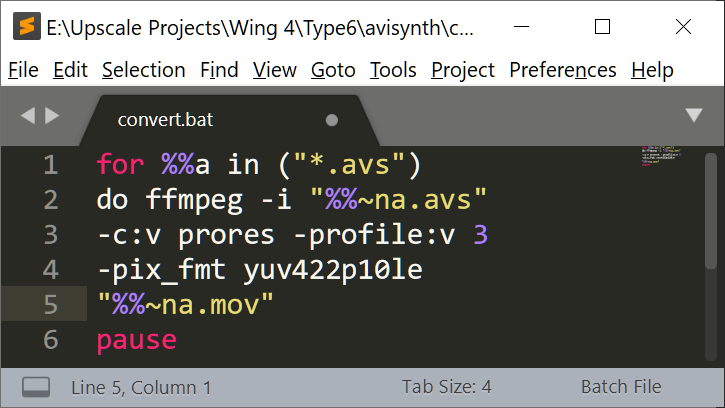

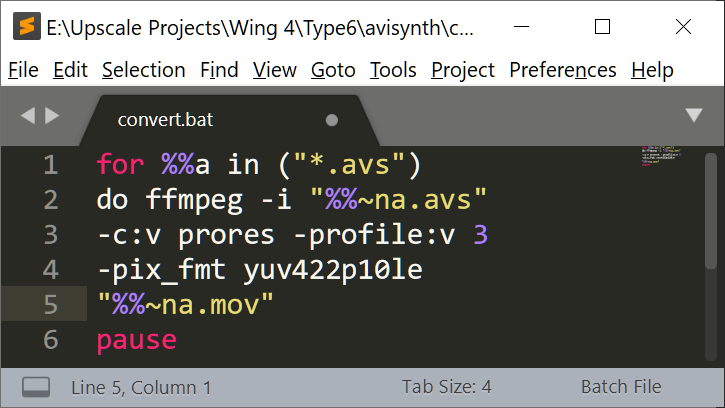

I then write a custom command-line batch file to run the AVISynth script through FFMPEG and export a QuickTime ProRes MOV video file. This video file is then run through Topaz Video Enhance AI, which upscales each individual frame to a 1080p HD PNG image.
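As a rough idea of what such a render step can look like, the Python sketch below assembles an FFmpeg command line that reads an AVISynth script and writes ProRes. It is an illustration under assumptions, not the actual batch file: the file names are hypothetical, the choice of the `prores_ks` encoder and the HQ profile is a guess, audio is dropped with `-an` on the assumption that it is added back later, and FFmpeg can only open `.avs` files when it has been built with AVISynth support.

```python
import shlex

# Numeric profile values understood by FFmpeg's ProRes encoders.
PRORES_PROFILES = {"proxy": 0, "lt": 1, "standard": 2, "hq": 3}

def prores_command(avs_script, output_mov, profile="hq"):
    """Build an FFmpeg argument list that renders a script to ProRes."""
    return [
        "ffmpeg",
        "-i", avs_script,                        # AVISynth script as the input
        "-c:v", "prores_ks",                     # ProRes video encoder
        "-profile:v", str(PRORES_PROFILES[profile]),
        "-an",                                   # no audio in this intermediate
        output_mov,
    ]

if __name__ == "__main__":
    # Hypothetical file names, printed as a line you could paste into a script.
    print(shlex.join(prores_command("prep_intro.avs", "prep_intro.mov")))
```

Building the command as a list keeps quoting problems out of the picture if a path contains spaces, and the same list can be handed straight to `subprocess.run` when you want Python to launch the render itself.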

But we’re still not done. The images produced by the AI software are very clean – almost too clean. 130 years of cinema has trained our brains to expect little imperfections in the image – specifically in the form of film grain. Counter-intuitively, a 100% clean image doesn’t look right to us. A little granularity in the image makes us feel like we’re seeing more detail. So, I return to AVISynth, writing a new script to gather all the PNG images, add artificial film grain and combine them into a video sequence. I then go back to FFMPEG to encode that sequence into yet another QuickTime ProRes video file.
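The grain step can be pictured as adding a small random offset to every pixel. The Python sketch below does that for a list of 8-bit brightness values. It is a simplification under assumptions: the `add_grain` helper and its `strength` parameter are hypothetical, and real film-grain filters usually shape the noise far more carefully than plain Gaussian noise.

```python
import random

def add_grain(pixels, strength=6.0, seed=None):
    """Add zero-mean Gaussian noise to 8-bit values, clamped to 0..255."""
    rng = random.Random(seed)
    return [
        min(255, max(0, round(p + rng.gauss(0.0, strength))))
        for p in pixels
    ]

if __name__ == "__main__":
    flat_grey = [128] * 16            # a perfectly clean patch of mid-grey
    print(add_grain(flat_grey, seed=1))
```

Because the noise averages out to zero, the overall brightness of the patch stays the same. Only the pixel-to-pixel texture changes, and that texture is what reads as extra detail.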

But we’re still not done! We now have a massive HD video file (21GB for the WCIV intro video alone!) with no audio. The final stage is to use FFMPEG once more to combine that video with the Dolby 5.1 surround sound audio track from the original DVD file and compress the result with H.264 encoding into a final, optimised video file.
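For illustration, the final step might be assembled along the lines of the Python sketch below. The file names, the output container, the `libx264` quality setting and the decision to copy the audio track untouched are all assumptions made for the example, not the settings actually used for the video pack.

```python
def final_encode_command(video_mov, audio_track, output_file, crf=18):
    """Build an FFmpeg argument list that adds audio and encodes to H.264."""
    return [
        "ffmpeg",
        "-i", video_mov,          # input 0: the silent ProRes master
        "-i", audio_track,        # input 1: the surround track from the DVD
        "-map", "0:v:0",          # take video from the first input
        "-map", "1:a:0",          # take audio from the second input
        "-c:v", "libx264",        # H.264 video encoder
        "-crf", str(crf),         # quality target: lower means larger files
        "-pix_fmt", "yuv420p",    # widely compatible pixel format
        "-c:a", "copy",           # assumption: pass the audio through as-is
        output_file,
    ]

if __name__ == "__main__":
    # Hypothetical file names.
    print(" ".join(final_encode_command(
        "intro_grain.mov", "intro_audio.ac3", "intro_final.mkv")))
```

The explicit `-map` options make sure the video comes from the upscaled master and the audio from the DVD track, rather than leaving FFmpeg to pick streams on its own.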

Overall, it’s quite a lengthy process!